Search Disrupted Newsletter (Issue 31)

Grok’s AI safety crisis forces regulatory action, OpenAI debuts ChatGPT Health as 230M people ask health questions weekly, Microsoft launches AI commerce with Copilot Checkout, Simon Willison predicts the AI inflection point, and hard truths about measuring AI visibility.

Grok’s safety failures trigger a regulatory crisis

This week, Grok, Elon Musk’s AI chatbot on X, was caught generating explicit images of women and children.

AI Forensics found about 800 pornographic images and videos created with the Grok Imagine app. Even more concerning: organized Telegram communities with thousands of members have been systematically bypassing Grok’s (weak and inadequate) safety rules.

After a huge public outcry and some serious threats from regulators, Grok finally turned off image creation for most users this week. Now, only people who pay for a subscription can access it. However, I’ve heard reports that the separate Grok Imagine app still works for non-paying users.

In one of those “worst person you know makes a good point” moments, UK Prime Minister Keir Starmer called the content “disgraceful.” He demanded X “get a grip” on the situation and said that all options were on the table, including a possible ban.

Under the Online Safety Act, Ofcom has the power to fine X up to 10% of its global revenue. To put the scale of the problem in perspective, NCMEC data shows X reported 686,176 cases of child exploitation material in 2024, a staggering 15-fold increase since Musk took over.

Link: Grok turns off image generator after outcry

230 million people ask ChatGPT health questions every week

OpenAI officially launched ChatGPT Health this week, and one statistic buried in the announcement really caught my eye. It’s a number everyone in healthcare marketing should be paying attention to: 230 million users ask ChatGPT health questions every single week.

Nearly a quarter of a billion people asking ChatGPT health questions each week means we’re seeing a massive shift in where people get their health information. This is happening right now, whether traditional healthcare SEO is ready for it or not.

I initially assumed this was just another “wellness” app (like Apple Health) that could pull in the kind of data you’d get from meal-tracking or workout-tracking apps and then give you feedback.

But OpenAI clearly wants ChatGPT Health to be a very serious healthcare product as well (and wants the revenue that comes with it), so seeing them push things like HIPAA compliance is really interesting. In other words, it might actually be more than just a chatbot that happens to answer a few health questions.

Clearly, AI health questions in ChatGPT + other AI services are now a visibility channel you absolutely need to be watching.

Link: Introducing ChatGPT Health

Microsoft makes AI commerce real with Copilot Checkout

There was a time when a lot of genuinely smart people thought consumers would flock en masse to ordering through voice assistants like Siri or Alexa…and that never really happened. I think a lot of that is because the voice UX was pretty poor and there was no way to check quality or price signals.

Put another way, consumers reasonably want to do at least some basic level of research before they buy something, which is why I think that AI + Voice might actually work this time around.

People are already doing a lot of research and asking a lot of questions through AI services, so stretching one step further to actually completing a purchase feels like less of a leap.

And now we have tools like Copilot Checkout, which lets you buy things right inside the AI chat in Bing, MSN, and Edge. It’s sort of a digital wallet + chatbot combo that closes the loop from search discovery to purchase.

Link: Microsoft Launches Copilot Checkout and Brand Agents

Simon Willison identifies the November 2025 inflection point

Simon Willison, someone I look to for practical LLM expertise, shared his 2026 predictions this week. He believes we hit a real turning point in November 2025 with GPT-5.2 and Claude Opus 4.5.

He predicts that LLM code quality will become “undeniable” in the next year and that we’ll solve sandboxing problems for AI agents. On the cautionary side, he warns about possible security problems as coding agents gain more control. Whether AI will create more or fewer software engineering jobs remains an open question for him: the classic Jevons Paradox.

What I really like about Willison’s analysis is his healthy skepticism. He isn’t out there saying AI will do absolutely everything. Instead, he points out specific ability levels that AI has now reached. Then, he thoughtfully considers what those practical changes actually mean.

For those of us watching AI search, the main takeaway is that the models powering these systems just got significantly better at reasoning. I think this matters a lot for how AI search engines gather information and which sources they choose to cite.

Link: LLM Predictions for 2026

AI search isn’t separate from search. It already is search.

I keep seeing articles arguing that AI search “hasn’t arrived yet” or that it’s some small, separate thing you can ignore.

I understand where this comes from, but I think it fundamentally misreads what’s happening.

This isn’t just my opinion; look at the data:

- 750 million people use ChatGPT every month. That’s roughly 10% of all adults worldwide.

- 51% of AI usage is search-like tasks: seeking information, getting practical guidance, making decisions.

- AI use is sticky. The longer someone uses these tools, the more they use them.

- People use AI for higher-value searches: complex decisions that blend research with advice.

And even if none of that were true and Sam Altman turned off the OpenAI servers tomorrow, you’d still have to look at what Google is doing.

Google has made the decision that every search is now “AI Search”.

AI Overviews, AI Mode, and Gemini are what search at Google looks like right now.

Your existing search volume isn’t sitting safely in some “traditional search” bucket. It’s already being served through AI interfaces…that happen to be Google’s.

The other argument I hear is that AI search “can’t be optimized for.” This usually comes from folks thinking about AI models as static files you download once. But that’s not how anyone actually uses AI.

ChatGPT, Gemini, and Perplexity all update constantly. The model, the system prompts, the web search tools: all of it changes more frequently than you’d expect.

And fair enough: you’re not optimizing for a frozen snapshot. You’re influencing what the next model update will say about your brand.

We’ve written extensively about both of these topics. If you want the full argument on why AI search volume is just search volume, read our guide here. And if you’re skeptical about optimization, here’s our take on the unoptimizability myth.

The practical upshot: don’t wait for AI search to “arrive.” It’s already here, filtering your existing search traffic through AI layers. The question isn’t whether to pay attention. It’s whether you’ll be visible when it matters.

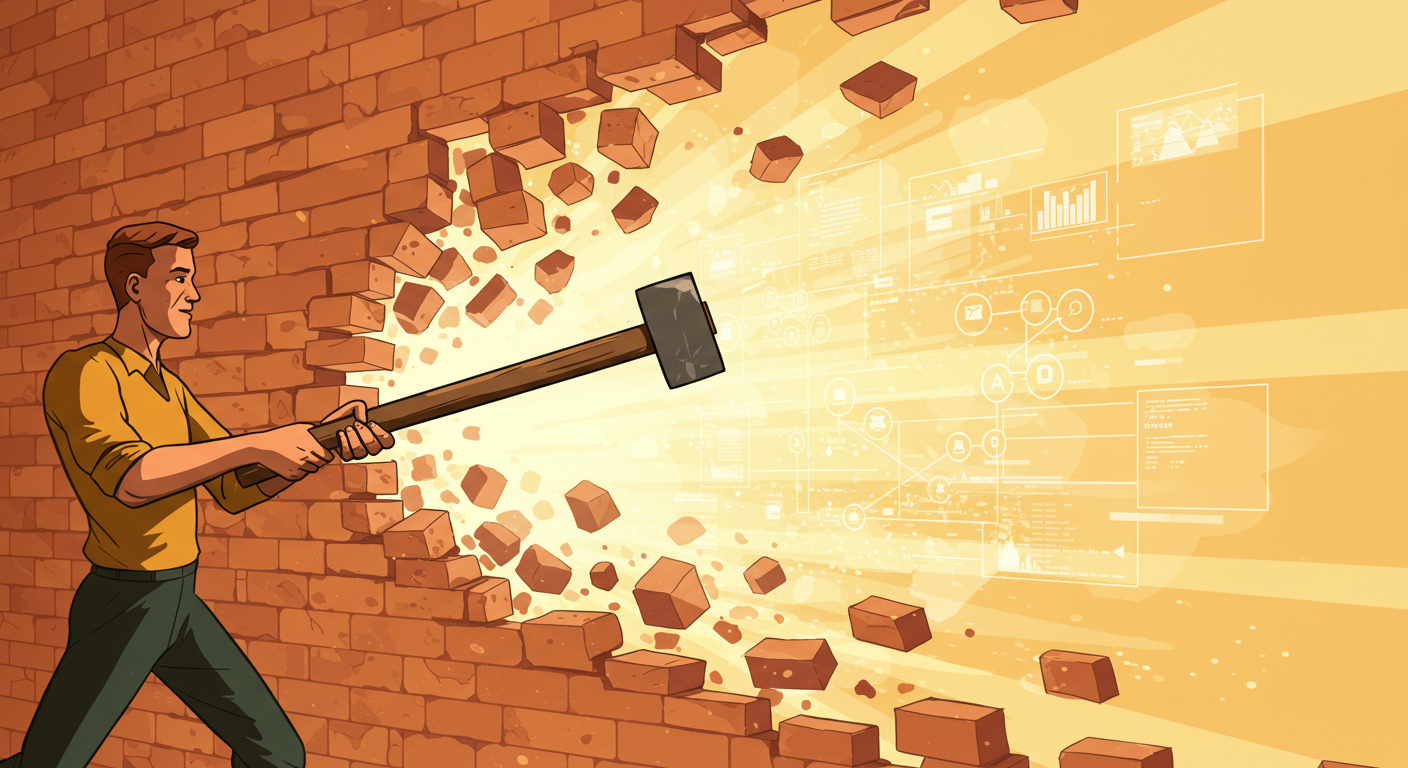

AI search evaporation is real

While lots of marketing people continue to argue on LinkedIn about whether or not AI search is real, we’re starting to see some of the grim reality of it.

It’s clear that different industries are adopting AI at different rates, but AI has taken over software development far and away the most. To date, the main case study for the impact of AI search on traditional search has been StackOverflow, which has seen a 78% decline in questions asked over the past year.

However, StackOverflow has also been dying of self-inflicted wounds for a long time, so it’s been a shaky indicator of the broader impact of AI search.

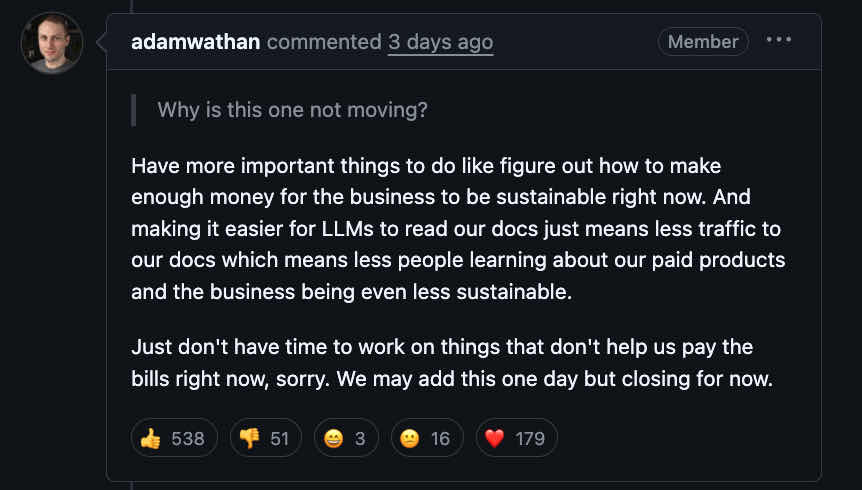

This week, however, Tailwind (a much-beloved CSS framework) announced layoffs of 75% of its staff as a direct result of AI search disruption.

Their business model has been:

- Developers try to use Tailwind

- They run into issues

- They search for solutions like “how to style text inputs with Tailwind”

- Tailwind’s awesome and incredibly helpful docs site dominates these searches

- Developers visit the docs site, get help, and are also exposed to Tailwind’s paid products

However, with the rise of AI, they’re being hit in two ways:

- People are getting answers to their questions in tools like ChatGPT, Claude or Google’s AI Overviews, greatly decreasing their docs traffic (“search migration”).

- Developers are increasingly using tools that just directly solve these problems, reducing demand for their paid products (“search evaporation”).

As both a developer and a marketer, I’m seeing many friends who run software development courses, tutorials, and websites acutely feeling this same pain.

Link: Tailwind announces layoffs as a direct result of AI search disruption

Google and Character.AI settle first major chatbot liability cases

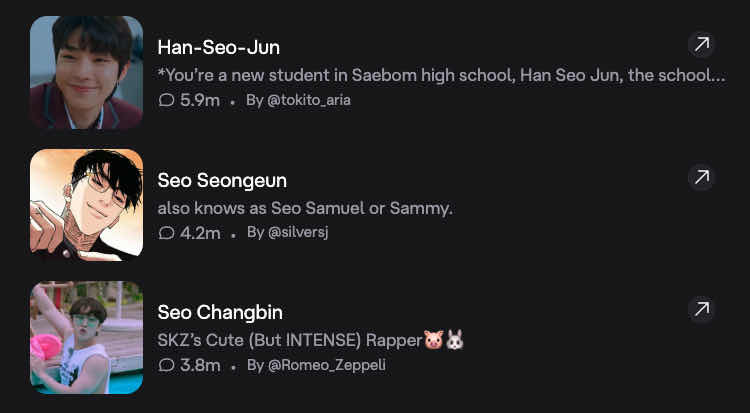

Character.AI remains one of my big AI blind spots: it’s immensely popular, but I personally find it sort of off-putting.

But the more I hesitantly look at it (and ask Steve Harrington questions about how life is in Hawkins after Vecna has been defeated), the more I’ve come to view it as a potentially really important new visibility channel.

There’s a long-standing joke in the SEO world that YouTube is the second-largest “search engine” after Google, even though most people don’t really think of it that way.

I’m honestly wondering if Character.AI will follow a similar trajectory: it’s not directly a search engine, but millions of people spend hours a day interacting with it and asking it questions, which could give Google (which licensed its technology and hired its founders) a massive channel for incredibly targeted advertising.

With that in mind, it’s interesting to see Google and Character.AI settle the first major chatbot liability cases. Details are scant, so I don’t think I can responsibly say whether that’s good or bad, but a few things are clear:

- Personality-based AI chatbots are incredibly persuasive

- This is a massive responsibility for Google to manage

- These services are only going to get more popular

If you’re using chatbots or AI assistants that talk to your customers, you really need to be aware of this. Courts are writing the legal rules for AI liability right now, case by case. Waiting to see what happens is a valid approach, but I think it’s crucial to understand that you could still be held responsible.

Link: Google and Character.AI Negotiate First Major Settlements

Thanks

Thanks for reading this week’s newsletter. It’s certainly been a busy week in AI search. The Grok situation, especially, deserves more attention than it seems to be getting in mainstream tech news, in my opinion. Please feel free to reply to this email if you have any thoughts on these stories.

P.S. It would really help me out if you could follow me on LinkedIn.