What are AI hallucinations?

Unlike traditional search engines that retrieve and link to existing content, AI systems generate responses in part by predicting what text should come next based on patterns in their training data.

Sometimes these responses are spookily accurate, and sometimes they're wildly off base.

This guide covers why hallucinations occur, why they matter for your brand, and how to detect and correct them.

This matters because AI systems are already "talking" to your prospects about your products, pricing, features, and company history.

Related: Learn more about visibility in AI search and why it matters for your brand.

"ChatGPT is now the most popular and least well-trained representative of our company."

What are the dangers of AI hallucinations?

AI hallucinations can have some serious consequences for your brand:

AI Search Journeys

More and more people are using AI for the entirety of their search journey, either making a series of queries within the same AI service or using a research agent like Google’s AI Mode to do the heavy lifting of visiting sites, qualifying the content, and filtering the results.

Reputation Damage

Hallucinated negative information—incorrect controversy details, fabricated scandals, or false business practices—damages your reputation without any factual basis.

Related: Learn how to monitor and improve AI search sentiment for your brand.

What makes brands vulnerable to hallucinations?

Many websites are heavy on top-of-funnel marketing content that draws people in, but doesn’t get to the details of directly addressing sales objections, pricing, and other potentially disqualifying information.

This top-heaviness makes brands more susceptible to hallucinations, as they haven’t laid a content foundation that addresses the sales objections and questions prospects have.

Producing too much top-of-funnel content and not enough bottom-of-funnel content can make your brand vulnerable to hallucinations.

Example: In the US, healthcare providers are required to comply with HIPAA regulations, so HIPAA compliance is often the first thing prospects look for when evaluating SaaS companies. A typical query is “Give me a list of email newsletter services that are HIPAA compliant.” That makes it vitally important that AI services know the correct answer to this question and don’t hallucinate a response.

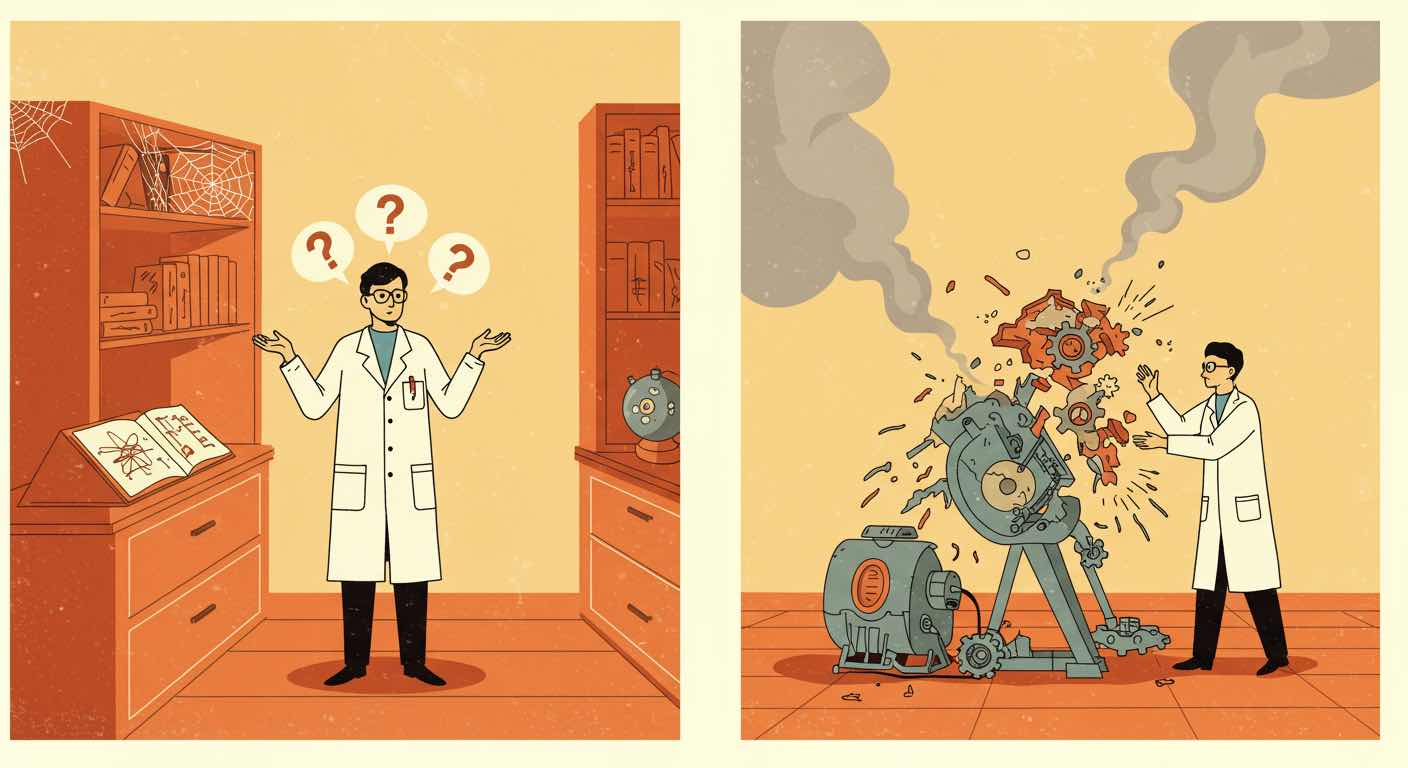

The two types of AI hallucinations

Hallucinations in AI models generally take two forms:

1. Ignorance-based hallucinations

The AI model responds with a (to it) “reasonable” extrapolation of the data it’s been given.

A simple lack of information is the most common cause of hallucinations, which is good news for marketers because it's an easy fix: create new content that addresses the hallucinated response.

By filling these gaps with real information, you can short-circuit the "guesstimating" that the AI would otherwise do.

2. Logic-based hallucinations

Logic-based hallucinations are caused by a flaw in the underlying reasoning (“thinking”) of the AI model.

As marketers, we can't really do much about these, as they're a result of the model's underlying training.

How do you detect hallucinations about your brand?

From evaluating millions of AI responses, we’ve built the following list of questions that we’d encourage you to test your brand with.

You can use Knowatoa to run these questions across all the different AI services for comprehensive detection, but for a quick check you can also manually paste them into ChatGPT, Claude, Perplexity, and Gemini.
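If you're checking manually, a small script can keep your test questions consistent from one AI service to the next. The sketch below is only an illustration: the question templates are placeholders based on the topics mentioned earlier (pricing, features, company history, HIPAA compliance), not a definitive question list, and `ExampleMail` is a made-up brand name.

```python
# Illustrative question templates only. These are placeholders for the
# kinds of topics prospects ask about, not an official list.
QUESTION_TEMPLATES = [
    "What does {brand} cost?",
    "What features does {brand} offer?",
    "When was {brand} founded, and by whom?",
    "Is {brand} HIPAA compliant?",
]


def build_prompts(brand, templates=QUESTION_TEMPLATES):
    """Fill the brand name into each question template."""
    return [template.format(brand=brand) for template in templates]


if __name__ == "__main__":
    # "ExampleMail" is a hypothetical brand used for demonstration.
    for prompt in build_prompts("ExampleMail"):
        print(prompt)
```

Paste each generated prompt into the AI services you want to check and compare the answers against what you know to be true about your brand.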


How to create hallucination-resistant content

Structure content to minimize hallucination risk:

Explicit statements: Use clear, direct statements. "We do not offer [feature]" leaves far less room for hallucination than simply never mentioning the feature.

Structured data: Use schema markup, structured formats, and consistent terminology to make information unambiguous.

FAQs: Address common questions explicitly. If AI hallucinates specific details repeatedly, create authoritative answers.
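As one way to combine the structured data and FAQ suggestions, here is a minimal sketch that turns question and answer pairs into schema.org `FAQPage` markup in JSON-LD. The helper name `faq_jsonld`, the brand `ExampleMail`, and the sample answer are all hypothetical; only the `FAQPage`, `Question`, and `Answer` types and their properties come from the schema.org vocabulary.

```python
import json


def faq_jsonld(pairs):
    """Build a schema.org FAQPage structure from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


if __name__ == "__main__":
    # Hypothetical brand and answer, used only to show the output shape.
    faqs = [
        (
            "Is ExampleMail HIPAA compliant?",
            "No. ExampleMail is not HIPAA compliant and does not sign BAAs.",
        ),
    ]
    print(json.dumps(faq_jsonld(faqs), indent=2))
```

The printed JSON would typically be embedded in the page inside a `<script type="application/ld+json">` tag, alongside the same answers written out in visible text.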

Related Guide: Learn how truthfulness and factual accuracy affect AI search rankings → Truthfulness - Eliminate AI Hallucinations